Last year, our group was tasked with developing a MicroStrategy dashboard that would show daily, weekly, and monthly views of the business from 5 different perspectives. The dashboard was to contain 30 reports, each with roughly 3 to 15 rows and 10 columns of data. It was to run nightly and be e-mailed to about 75 executives. This was THE report for the enterprise. Solution after solution was developed all the way through and tested, only for us to discover file size and report performance problems. This article describes each approach and finishes with the solution we selected.

Our initial approach was to provide the most pleasant user experience possible by building an interactive Flash dashboard. For two weeks we worked through the dashboard development, layering panel after panel, placing reports on panels at various levels, and manually formatting every pixel. The end product was both interactive and pleasing to the eye, and we thought we were only steps away from completion. We then began testing by e-mailing the dashboard through Narrowcast. We quickly noticed that the dashboard e-mail was 2 MB and took about 10 to 15 seconds to load. The e-mail was larger than we would have liked, and a 10 to 15 second wait was unacceptable. We quickly dropped this solution and started looking at our other options.

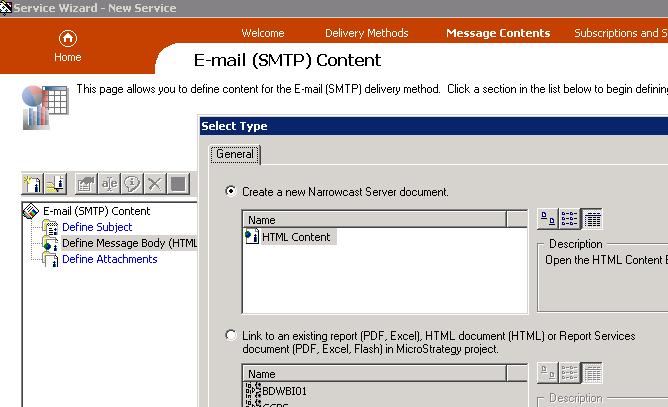

Excel or PDF attachments are always a great option when narrowcasting reports, and these files are very compact. However, this dashboard had to be viewable directly in the body of the e-mail, mainly because of the audience it was built for. Shown below are the options in Narrowcast for what can be included in the body of an e-mail.

Our next option was to use Report Services documents, and if that failed, we would try HTML documents. We tried both, and although they delivered the reports in an acceptable format, the dashboard was still between 1 and 2 MB. The load time problem had been solved, but the dashboard was still larger than desired (by comparison, a Narrowcast Excel attachment containing only the reports totaled 100 kB).

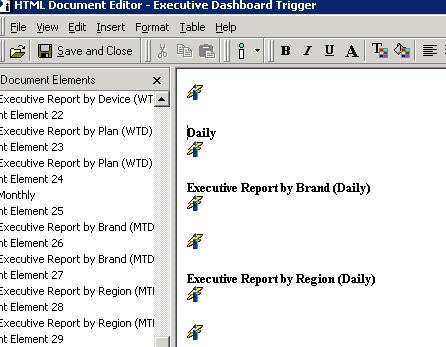

At this point we started working directly with the Narrowcast server document (the first option in the picture above). We found that server documents are very easy to create: essentially, you insert grid reports onto a blank template, as shown below:

The end result was a nicely formatted dashboard e-mail with a file size of around 800 kB.

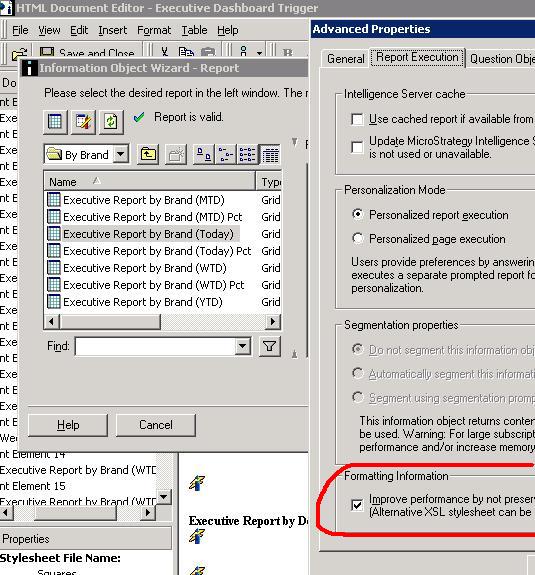

Although we could have lived with this result, we still felt there was room for improvement. From experience with other BI tools, we knew that similar e-mailed reports came through at half the size, so we investigated further. We found two more settings that could be applied. First, the Advanced Properties of each document object include a setting to ‘Improve performance by not preserving the grid reports formatting’, as shown below:

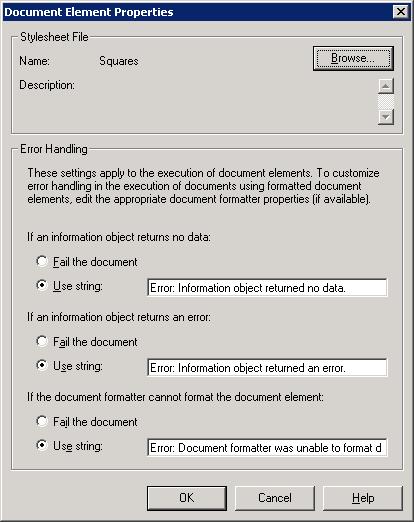

Next, each report object’s Document Element Properties let you specify your own style sheet, which your Narrowcast Server must be able to access. This setting is what gives your report more formatting than plain text. The picture below shows that we picked the style sheet called ‘Squares’.

With these changes, we got the e-mail down to 400 kB, an acceptable size and even a bit better than we expected. I realize this is a lot of detailed technical information, but hopefully this case study shows the various options for e-mailing with Narrowcast Server. If the report does not need to be viewable directly in the e-mail body, attachments are definitely the best way to go; otherwise, I’m pretty sold on using Narrowcast Server documents with style sheets.
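To put the reported sizes in perspective, here is a small Python sketch that tabulates each approach and multiplies it by the roughly 75 recipients. It is only arithmetic on the numbers given above, not anything from MicroStrategy itself, and it assumes that 1 MB = 1000 kB and that every executive receives a separate copy each night. The names `REPORTED_SIZES_KB`, `nightly_volume_mb`, and `share_of_flash` are illustrative.

```python
# Rough comparison of the e-mail sizes reported in this case study.
# Assumptions (not from MicroStrategy): 1 MB = 1000 kB, and each of the
# 75 recipients receives a separate copy of the e-mail every night.

RECIPIENTS = 75

# (approach, low_kb, high_kb): sizes as reported; ranges keep both bounds.
REPORTED_SIZES_KB = [
    ("Flash dashboard", 2000, 2000),
    ("Report Services / HTML document", 1000, 2000),
    ("Narrowcast server document", 800, 800),
    ("Server document, no grid formatting, style sheet", 400, 400),
    ("Excel attachment (reports only)", 100, 100),
]


def nightly_volume_mb(low_kb, high_kb, recipients=RECIPIENTS):
    """Return (low, high) total megabytes sent per night for one approach."""
    return (low_kb * recipients / 1000, high_kb * recipients / 1000)


def share_of_flash(size_kb):
    """Size relative to the original 2 MB Flash dashboard (1.0 = same size)."""
    return size_kb / REPORTED_SIZES_KB[0][1]


if __name__ == "__main__":
    for name, low, high in REPORTED_SIZES_KB:
        lo_mb, hi_mb = nightly_volume_mb(low, high)
        # Show a single figure when the reported size was exact, a range otherwise.
        volume = f"{lo_mb:g} MB" if lo_mb == hi_mb else f"{lo_mb:g}-{hi_mb:g} MB"
        print(f"{name}: {volume} per night, {share_of_flash(high):.0%} of Flash size")
```

Under these assumptions, the final server document sends about 30 MB per night across all recipients, compared with about 150 MB for the Flash version, and each e-mail is one fifth of the original size.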