Historical master data attributes are often specially handled when designing an Enterprise Data Warehouse (EDW). On most BI projects I’ve been on, there is an exercise of sitting with the business in order to determine which attributes are required to be stored historically. Once this is complete, the requirements are then sent off to the Data Warehouse team for design and development. How this solution gets architected may be one of the determining factors on whether your project is successful or not, and as a BI team member, sometimes getting your two cents can make your life that much easier.

In the past, I’ve come across three different designs for handling historical master data attributes, with one of them standing out above the rest. The first design involves placing historical attribute fields directly on to fact tables. Please remember we are talking about historical attributes that are master data related, and not historical attributes that are related directly with transactions. The major issue with this comes about during validation and warehouse maintenance if data problems exist. In order to validate that ETL processes are working properly, one now has to validate every row in fact table to make sure that the historical attribute is correctly derived. Also, because this attibute is stored at the fact level, we now have to add this attribute to any new fact tables when needed. If, for any reason, problems are found in the historical attribute derivation, then the fact tables have to be dropped and reloaded, then re-validated. Simply put, this solution should not be used if it can be avoided.

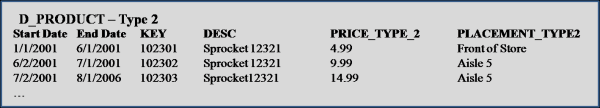

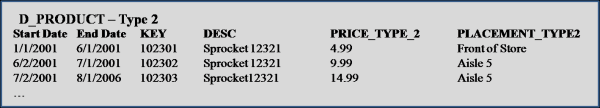

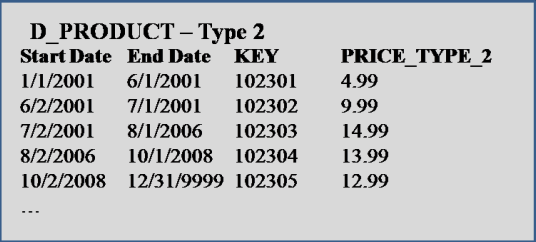

Another design which is quite popular is the use of time based, type 2 dimensions. This solution involves storing attributes, along with the date range for which those values are valid. So for example:

This solution works just fine in conjunction with a fact table. To pull the current value of an attributes, we only need to pull the latest record, usually marked with an end date of ‘12/31/9999′. The only issues that come about with this approach is one, if you store more than one historic attribute on a dimension, then reporting history of those attributes without joining to a fact table cannot be accomplished. From our example diagram above, notice that the Price Type field is accurately showing history, but Placement Type contains an extra record showing Aisle 5 starting on both 6/1/2001 and 7/1/2001. One solution for avoiding this, is to store the Previous values for every historic attribute, and then only pull historic data for an attribute if the Prev value <> Current value. The second issue often comes about when ETL is used to create history in the warehouse. This may be the only possible solution, but allowing this can ’open up a can of worms’, and now makes validation a much more difficult process.

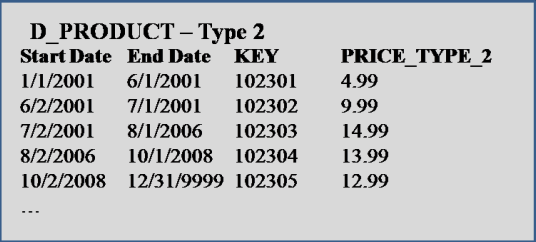

The recommended solution here actually involves setting rules to the type 2 dimension just discussed. First off, only track the history of one historic attribute per dimension table.

This simplifies the ETL and data storage designs, and also eliminates the confusion when you have more than one historical attribute on a table. Storing the data in this way not only seems more natural, but also simplifies the validation and maintenance of the historical data. If a problem is found, then quite simply, fix that problem in one place rather than dealing with more complex ETL procedure used to track multiple historic fields on one table. Second, don’t use ETL to create history if it can be helped. ETL created history only starts at the time that the ETL script is implemented, so you never get a full view of history unless it was implemented from the beginning. Also, ETL created history is difficult to validate, and in the case that problems arise, fixing that history can also be a problem. Of course, every implementation is different, and you may not have a choice. All of the information in this article has been tested thoroughly through implementation via MicroStrategy 8.1.